Choosing between Fabric Dataflows and Data Pipelines

Dataflows

In my previous blog, I discussed some considerations when choosing between a Fabric lakehouse or warehouse in your data architecture. Continuing on the topic of choosing resources in Microsoft Fabric, I will lay out some considerations when deciding between dataflows and data pipelines in Fabric's data factory experience.

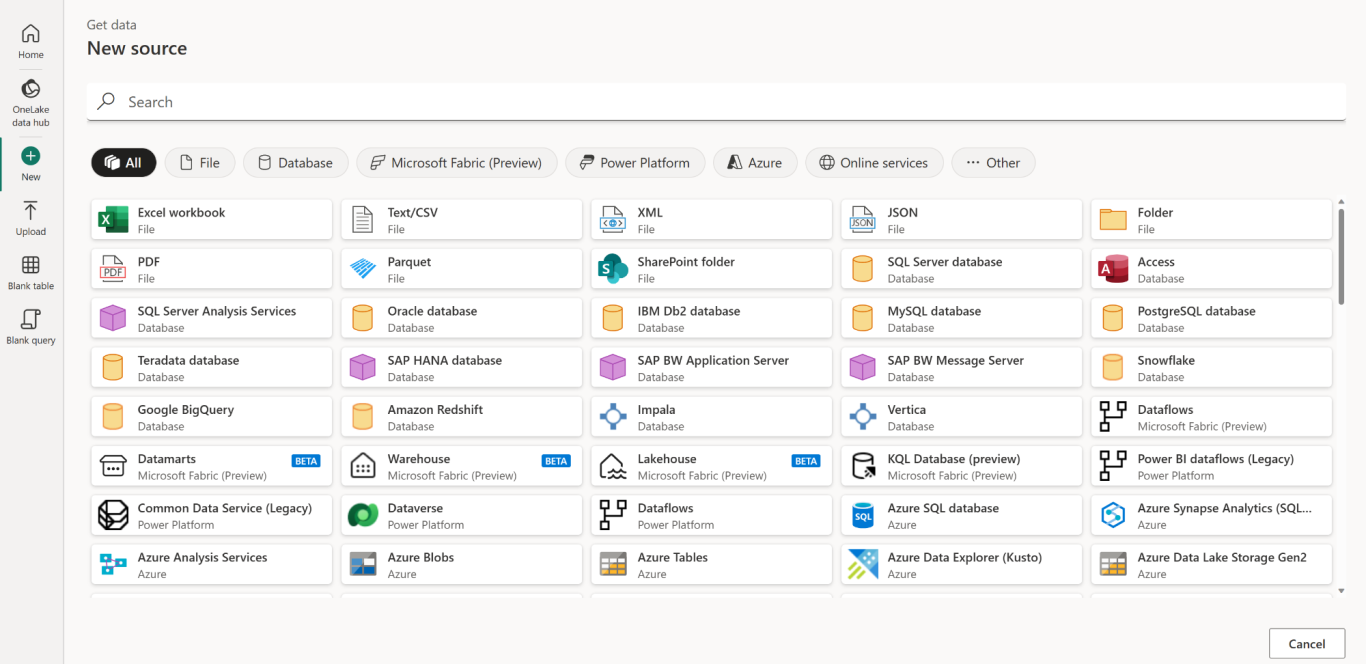

Dataflows in Microsoft Fabric provide a low code option for data preparation and transformation. They allow business users to use hundreds of out of the box connectors to connect to both on-premises and cloud data sources. Dataflows leverages the Power Query online interface to give end-users a friendly UI experience when performing their data preparation or transformation activities. I consider Dataflows in Microsoft Fabric to be a near equivalent of mapping dataflows in Azure Data Factory. You can read more on the similarities here.

Data Pipelines

Data pipelines provide a robust, scalable solution for complex data workflows and large-scale data processing. Use data pipelines to orchestrate and automate your data movement and transformation activities. Data pipelines offer advanced scheduling, monitoring, and error-handling capabilities, and they let you call other Fabric items in your data processes, such as notebooks, stored procedures, dataflows, and even other nested data pipelines. In my opinion, data pipelines offer a very similar experience to Azure Data Factory pipelines, though some differences still exist. Data pipelines in Fabric offer many of the same activities as Azure Data Factory pipelines, plus some new capabilities such as integrated Outlook and Teams notifications and semantic model refresh.

Data pipeline activity reference

Data pipeline connector reference

Data Scenarios

The following section walks through some common data scenarios and the recommended Data Factory solution in Microsoft Fabric for each.

Scenario 1

I am a business user who has gained access to my organization's enterprise data model. I need to incorporate an additional data source, and a few tables from that data source, into my new Power BI report. I want the ingestion and transformation of these tables to be automated and the data to be refreshed daily. I do not have much coding experience and am looking for a low-code/no-code experience for my data ingestion and transformation requirements. This new data source is not scheduled to be incorporated into the enterprise data model for some time, and the new Power BI report needs to be ready by next week.

Recommendation: Dataflows

Explanation: Dataflows provide a low-code option for data ingestion and transformation. With support for hundreds of data connectors and more than 300 data transformations, it's likely that Dataflows support an out-of-the-box connection to my new data source. Dataflows offer a friendly end-user experience through Power Query Online that requires little to no code. I can also refresh my data daily using the scheduled refresh capability in Dataflows.

Scenario 2

I am a data engineer working in my organization's data analytics team. I have been tasked with creating a process that handles large-scale ETL activities across multiple data sources. I want to limit the number of Data Factory items I need to manage in this solution. My manager also requires the solution to include robust monitoring and alerting capabilities.

Recommendation: Data Pipelines

Explanation: Data pipelines provide a flexible and scalable solution, allowing end users to monitor their pipeline runs and send customized alerts about them. They also scale well with techniques such as a metadata-driven framework, in which a single parameterized pipeline reads its list of sources from a control table and repeats the same ingestion and transformation steps for each one. This keeps the number of Data Factory items to manage small, as the scenario requires.
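To make the metadata-driven idea more concrete, here is a minimal Python sketch of the pattern, assuming a hypothetical control table with one row per source. The `SourceConfig` fields, table names, and the `build_copy_tasks` helper are all illustrative and are not a Fabric API. In a Fabric data pipeline, the same idea is commonly built with a Lookup activity that reads the control table and a ForEach activity that runs a parameterized Copy data activity once per row; the sketch only shows how those rows turn into per-source copy instructions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class SourceConfig:
    """One row of a hypothetical metadata (control) table."""
    source_system: str
    source_table: str
    target_table: str
    load_type: str                      # "full" or "incremental"
    watermark_column: Optional[str] = None
    enabled: bool = True


def build_copy_tasks(
    configs: List[SourceConfig], last_watermarks: Dict[str, str]
) -> List[Dict[str, str]]:
    """Turn control-table rows into one copy instruction per enabled source."""
    tasks: List[Dict[str, str]] = []
    for cfg in configs:
        if not cfg.enabled:
            continue  # disabled rows are skipped without editing the pipeline
        query = f"SELECT * FROM {cfg.source_table}"
        if cfg.load_type == "incremental":
            if cfg.watermark_column is None:
                raise ValueError(
                    f"{cfg.source_table}: incremental load needs a watermark column"
                )
            since = last_watermarks.get(cfg.target_table)
            # With no stored watermark yet (first run), fall back to a full load.
            if since is not None:
                # Only pull rows changed since the last successful load.
                query += f" WHERE {cfg.watermark_column} > '{since}'"
        tasks.append(
            {
                "source_system": cfg.source_system,
                "query": query,
                "target_table": cfg.target_table,
            }
        )
    return tasks


if __name__ == "__main__":
    # Hypothetical control-table contents for illustration only.
    control_table = [
        SourceConfig("erp", "dbo.Customers", "bronze_customers", "full"),
        SourceConfig("erp", "dbo.Orders", "bronze_orders", "incremental", "ModifiedDate"),
        SourceConfig("crm", "dbo.Leads", "bronze_leads", "full", enabled=False),
    ]
    for task in build_copy_tasks(control_table, {"bronze_orders": "2024-01-01"}):
        print(task)
```

Adding a new source then means adding a row to the control table rather than building another pipeline, which is what keeps the item count down. Building SQL by string concatenation is only tolerable here because the values are assumed to come from a trusted, team-owned control table.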

Scenario 3

I am a business analyst, and I have been tasked with creating a report that requires data from an on-premises SQL Server in my organization. The report also requires data from an Azure Data Lake Storage (ADLS) account that is only reachable through a private endpoint.

Recommendation: Dataflows

Explanation: Dataflows support both the on-premises data gateway and the virtual network (VNet) data gateway. In this scenario, I can set up a connection to the on-premises SQL Server through the on-premises data gateway, along with a connection to the ADLS account through the VNet data gateway, to securely ingest my data and use it in my Power BI report. At the time of writing, data pipelines do not support the VNet data gateway, though I expect that support will be added in the future.

Scenario 4

I am a data engineer, and I have been tasked with creating a process that loads and updates our organization's data warehouse environment in Fabric. The data analytics team has existing Fabric dataflows and notebooks that need to be used in the process. A final requirement is that the organization's enterprise semantic model must be refreshed after the data warehouse tables have been updated. The whole solution must also be run by a single Data Factory item scheduled daily.

Recommendation: Data Pipelines

Explanation: Data pipelines can run Fabric items such as dataflows and notebooks as pipeline activities. They can also refresh a semantic model through a built-in activity, without you having to code that request as an API call. Finally, data pipelines can nest and invoke "child" data pipelines from a single parent (orchestrator) pipeline, so only that one pipeline needs a daily schedule.
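For comparison, this is roughly the request you would otherwise have to write yourself. The Python sketch below only builds, and does not send, a call to the Power BI REST API's "Refresh Dataset In Group" endpoint (semantic models are still called "datasets" in that API). The workspace ID, semantic model ID, and access token are placeholders; acquiring a Microsoft Entra ID token is assumed to happen elsewhere, and `build_refresh_request` is a hypothetical helper name.

```python
import json
import urllib.request

POWER_BI_API = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(
    workspace_id: str, semantic_model_id: str, access_token: str
) -> urllib.request.Request:
    """Build (but do not send) a Power BI REST refresh request for one semantic model."""
    # The API path uses the older name "datasets" for semantic models.
    url = f"{POWER_BI_API}/groups/{workspace_id}/datasets/{semantic_model_id}/refreshes"
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # The bearer token is assumed to be obtained separately (e.g. via Entra ID).
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder IDs and token for illustration only.
    req = build_refresh_request("<workspace-id>", "<semantic-model-id>", "<token>")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req) with a real token.
```

A successful submission returns HTTP 202 Accepted and the refresh then runs asynchronously, so a hand-coded solution would also need to check the refresh history (a GET on the same `/refreshes` path) to detect failures.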

Scenario 5

I am a data engineer, and I have been tasked with ingesting large amounts of data from multiple cloud databases. This source data will be used by my data analytics team to create our new central data warehouse. The solution needs to be highly performant and scalable.

Recommendation: Data Pipelines*

Explanation: While dataflows offer the high-performance Fast Copy feature, data pipelines offer a similarly performant Copy data activity. In my opinion, data pipelines are a much more scalable and flexible solution and are better suited to enterprise workloads. Today, dataflows are not designed to scale in the same way, which makes data pipelines the clear choice for large and complex data workflows. Another option is to combine the two: one or more dataflows perform the data ingestion, and a data pipeline orchestrates and schedules them.

Conclusion

In general, data pipelines offer more robust, enterprise-scale capabilities than dataflows. My general recommendation is to use data pipelines whenever possible in your Fabric data architecture. However, dataflows do have their place in your organization's data ecosystem, especially in self-service analytics scenarios. Business users who need managed data assets and are looking for a low-code/no-code experience may turn to dataflows to meet those needs in the short term.

For those of you looking to get started on your Data Factory journey in Microsoft Fabric, here are some learning resources:

- Data Factory in Microsoft Fabric Documentation

- Use Data Factory Pipelines in Microsoft Fabric

- Ingest Data with Dataflows in Microsoft Fabric

I appreciate you taking the time to read this blog post. I hope this helped paint a clearer picture in deciding when to use dataflows vs data pipelines in Microsoft Fabric. Happy continued learning!
